After discussing with Nilesh, I came to know that if we define the Source Qualifier data type as varchar (instead of text, which is the default when you pull a CLOB source into an Informatica mapping), it will load data longer than 4000 characters without raising any Oracle error.

To verify this, I created a new CLOB table as below:

```sql
create table ClobTest
(
  id       number,
  clobData clob
);
```

I inserted data longer than 4000 characters into the clobData column of the ClobTest table. To learn how to insert more than 4000 characters into a CLOB column, please refer to this link: http://gog

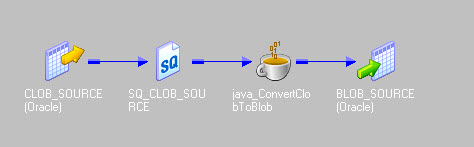
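As one possible approach (a minimal sketch, not necessarily the method in the linked article), the long value can be built in PL/SQL and then inserted. In Oracle 10g a SQL string literal is limited to 4000 bytes, while a PL/SQL VARCHAR2 expression can hold up to 32767 bytes. The row id `1` and the 'A'/'B' filler text are hypothetical test values, not the data used in this post.

```sql
-- Sketch: insert one row whose CLOB holds 5000 characters.
-- id 1 and the 'A'/'B' filler are hypothetical test data.
DECLARE
  v_data CLOB;
BEGIN
  -- 4000 'A's followed by 1000 'B's, built in PL/SQL to avoid
  -- the 4000-byte limit on SQL string literals
  v_data := RPAD('A', 4000, 'A') || RPAD('B', 1000, 'B');
  INSERT INTO ClobTest (id, clobData) VALUES (1, v_data);
  COMMIT;
END;
/

-- Check the stored length (in characters); should be 5000 for id 1
SELECT id, DBMS_LOB.GETLENGTH(clobData) AS clob_length
FROM   ClobTest
WHERE  id = 1;
```

The stored length reported by the final query can later be compared with the number of characters Informatica writes to the target file.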

Next, I imported the ClobTest table into a mapping as below. The target in this mapping is a flat file.

As you can see, by default Informatica assigned the data type text with a length/precision of 4000.

When I ran the mapping, the workflow executed successfully and wrote 4000 characters, matching the port precision, to the target text file.

So the error that occurred at the office might be due to the Informatica or Oracle version, which I still need to confirm. Here, I am using Informatica 9.0.1 HotFix and Oracle 10.2.0 Standard Edition.

I will verify this soon.