Monday, December 19, 2011

Monday, December 5, 2011

XML Part - II

This is extension to link http://gogates.blogspot.com/2011/09/xml-source.html

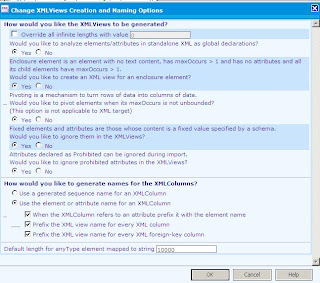

We will first see the difference between the options we get while importing the XML definition.

Our XML source is like below taken from link -- http://www.w3schools.com/xml/xml_attributes.asp

<note>

<date>

<day>10</day>

<month>01</month>

<year>2008</year>

</date>

<to>Tove</to>

<from>Jani</from>

<heading>Reminder</heading>

<body>Don't forget me this weekend!</body>

</note>

We used 2 options as seen in the screenshot

This is a first option as

This is a second option as

Basically it changes the xml source node column name. Please find the below difference of both cases

If you see, there is a difference in primary key name and main node name. Second has same name as of xml definition whereas first has name generated of its own.

We will first see the difference between the options we get while importing the XML definition.

Our XML source is like below taken from link -- http://www.w3schools.com/xml/xml_attributes.asp

<note>

<date>

<day>10</day>

<month>01</month>

<year>2008</year>

</date>

<to>Tove</to>

<from>Jani</from>

<heading>Reminder</heading>

<body>Don't forget me this weekend!</body>

</note>

We used 2 options as seen in the screenshot

This is a first option as

This is a second option as

Basically it changes the xml source node column name. Please find the below difference of both cases

If you see, there is a difference in primary key name and main node name. Second has same name as of xml definition whereas first has name generated of its own.

Friday, December 2, 2011

Map and Session Relation

The relation between a Mapping and a Session is like a relationship between a groom and bride of a hindu family. As in Hindu family, unless you gave divorse to your privious wife or husband, you can not do another merriage, just like that unless you delete a map, you can not assign new map to particular session :) Yes re-namming the map will not do ;-)

We create a map say Map1 and create a session say Session1 and we assign Map1 to Session1 while creating it.

Scenario which I had faced.

Now, I have to do some new development at Map1, but before doing it, I took a copy of it say Map2 in same folder. I completed my development at Map1, but at the end of it, client said ealier thing was fine and he wants that only.

Now, I have Map2 as my original map and Map1 which I have edited. As I did number of changes, I thought its better to use Map2 instead of Map1. So, I renamed Map1 to Map1_Edited and Map2 to Map1.

I ran the session Session1 and surprizingly it got failed. I checked for the root cause, and when I saw it was running Map1_Edited instead of Map1.

This means, session will stick to its firstly bonded map eventhough you change its name.

How to tackle this?

Now the option I had is to do changes in Map1_Edited itself, but changes were so big that it was a difficult task. I planed that, I will rename Map1 to Map1_Old and re-import Map1_Edited as Map1. So, I renamed Map1 to Map1_Old and exported both Map1_Old and Map1_Edited.

Now my correct map was Map1_Old which I renamed (I renamed the xml of it) and tried to import it. But at the time of import, it showed me its old name as Map1_Old

Why ?

Thing is, when you rename the map xml, it did not actually renames its Map name but only the xml name.

How?

To rename its actual name, edit the xml and search for

We create a map say Map1 and create a session say Session1 and we assign Map1 to Session1 while creating it.

Scenario which I had faced.

Now, I have to do some new development at Map1, but before doing it, I took a copy of it say Map2 in same folder. I completed my development at Map1, but at the end of it, client said ealier thing was fine and he wants that only.

Now, I have Map2 as my original map and Map1 which I have edited. As I did number of changes, I thought its better to use Map2 instead of Map1. So, I renamed Map1 to Map1_Edited and Map2 to Map1.

I ran the session Session1 and surprizingly it got failed. I checked for the root cause, and when I saw it was running Map1_Edited instead of Map1.

This means, session will stick to its firstly bonded map eventhough you change its name.

How to tackle this?

Now the option I had is to do changes in Map1_Edited itself, but changes were so big that it was a difficult task. I planed that, I will rename Map1 to Map1_Old and re-import Map1_Edited as Map1. So, I renamed Map1 to Map1_Old and exported both Map1_Old and Map1_Edited.

Now my correct map was Map1_Old which I renamed (I renamed the xml of it) and tried to import it. But at the time of import, it showed me its old name as Map1_Old

Why ?

Thing is, when you rename the map xml, it did not actually renames its Map name but only the xml name.

How?

To rename its actual name, edit the xml and search for

Saturday, November 26, 2011

Basic Materialized View !!

Because of some reason, yesterday was re-creating / arranging my resume again. I was amazed when I came across certification section. Between Jan 2007 to Sep 2009 , in 2 years I had given SCJP, Oracle SQL, SCWCD, ITIL, , Oracle Pl-SQL, Oracle Fundamental-1, and lastly Oracle Fundamental - II, and the thing by which I amazed is, in year 2010 and 2011 I have not done any. :-( Reason was simple, I became satisfied, which kept me apart from learning and achieving more.

Today, went at Zensar Circle again. Met Sir (Ashish Jaiswal) and Sikku Bhai (Sikandar Shaikh), sir explained some things related to Partition Table and as always Sikku Bhai told, its 10 months over now, looking for job change..:) With sir's 30min lecture on Partition table and backup, I got motivated and thought about old days. Around 1, 1.5 years back, had tried number of things on Materialized view, and thought will do something on that before I go to bed...

taking the stuff from below link of "data warehousing guide 10g"-- give this words as search at google.

http://docs.oracle.com/cd/B19306_01/server.102/b14223.pdf

The basic difference I saw in data modeling of Oracle and Teradata is, Oracle advice to create Start Or SnowFlex schema structure and Teradata prefers 3NF. We do not have to bother about advances and dis-advances, both gives us job and money, so both are good.

Oracle uses Bit Map indexes which are mostly created on Fact tables. Below stuff is simply copied from page number 96 [6 - 2] of above link.

"The advantages of using bitmap indexes are greatest for columns in which the ratio of

the number of distinct values to the number of rows in the table is small. We refer to

this ratio as the degree of cardinality. A gender column, which has only two distinct

values (male and female), is optimal for a bitmap index. However, data warehouse

administrators also build bitmap indexes on columns with higher cardinalities.

For example, on a table with one million rows, a column with 10,000 distinct values is

a candidate for a bitmap index. A bitmap index on this column can outperform a

B-tree index, particularly when this column is often queried in conjunction with other

indexed columns. In fact, in a typical data warehouse environments, a bitmap index

can be considered for any non-unique column.

B-tree indexes are most effective for high-cardinality data: that is, for data with many

possible values, such as customer_name or phone_number. In a data warehouse,

B-tree indexes should be used only for unique columns or other columns with very

high cardinalities (that is, columns that are almost unique). The majority of indexes in

a data warehouse should be bitmap indexes.

In ad hoc queries and similar situations, bitmap indexes can dramatically improve

query performance. AND and OR conditions in the WHERE clause of a query can be

resolved quickly by performing the corresponding Boolean operations directly on the

bitmaps before converting the resulting bitmap to rowids. If the resulting number of

rows is small, the query can be answered quickly without resorting to a full table scan." I do not know, why in my old project not a single index was bit-map, thought the structure was Snow Flex..

Lets start with Materialized View now....

What is Materialized View and Normal View ?

Materiazlied view is just like normal view, only thing is it eats its own space at DB which normal view do not. Materialized view keeps its data at its own space wherein normal view always fetches the data from its base tables. Because of Query-Re-write, thought you fire select query on base tables, oracle will fetch the data from materialized view[ If its defined on base tables] and many more...we are not preparing for interview here, so its not important to find out anything more. If needed, go through Basic MV chapter from this book.

Creating materialized view using DB Link, i.e. base tables are in another db, and your MV is in different one -- I had tried it earlier and it was worked that time.. While reading this book came across the line

"If a materialized view is to be used by query rewrite, it must be stored in the same database as the detail tables on which it relies".. this means you can create it, but query re-write will not work, Will try this at end as I do not have another db created at home. Seems Query Re-Write will not work if we are using db link at select query of materialized view, need to check.

Note:- Only thing by which you can not define MV is

"You cannot, however, define a materialized view with a subquery in the

SELECT list of the defining query. You can, however, include subqueries elsewhere in

the defining query, such as in the WHERE clause."

e.g. Query should not be like

select id, name, (select address from myTable), age

from employee

This will not work... All tables has to be at where clause or from clause.

As per book there are 3 types of MVs

■ Materialized Views with Aggregates

■ Materialized Views Containing Only Joins

■ Nested Materialized Views

Some thing imp to read before we start creating MV --

If you specify REFRESH FAST, Oracle performs further verification of the query

definition to ensure that fast refresh can be performed if any of the detail tables

change. These additional checks are:

■ A materialized view log must be present for each detail table unless the table

supports PCT. Also, when a materialized view log is required, the ROWID column

must be present in each materialized view log.

■ The rowids of all the detail tables must appear in the SELECT list of the

materialized view query definition.

If some of these restrictions are not met, you can create the materialized view as

REFRESH FORCE to take advantage of fast refresh when it is possible. If one of the

tables did not meet all of the criteria, but the other tables did, the materialized view

would still be fast refreshable with respect to the other tables for which all the criteria

are met.

Materialized Join Views FROM Clause Considerations

If the materialized view contains only joins, the ROWID columns for each table (and

each instance of a table that occurs multiple times in the FROM list) must be present in

the SELECT list of the materialized view.

If the materialized view has remote tables in the FROM clause, all tables in the FROM

clause must be located on that same site. Further, ON COMMIT refresh is not supported

for materialized view with remote tables. Materialized view logs must be present on

the remote site for each detail table of the materialized view and ROWID columns must

be present in the SELECT list of the materialized view, as shown in the following

example.

CREATE MATERIALIZED VIEW LOG ON sales WITH ROWID;

CREATE MATERIALIZED VIEW LOG ON times WITH ROWID;

CREATE MATERIALIZED VIEW LOG ON customers WITH ROWID;

CREATE MATERIALIZED VIEW detail_sales_mv

PARALLEL BUILD IMMEDIATE

REFRESH FAST AS

SELECT s.rowid "sales_rid", t.rowid "times_rid", c.rowid "customers_rid",

c.cust_id, c.cust_last_name, s.amount_sold, s.quantity_sold, s.time_id

FROM sales s, times t, customers c

WHERE s.cust_id = c.cust_id(+) AND s.time_id = t.time_id(+);

Alternatively, if the previous example did not include the columns times_rid and

customers_rid, and if the refresh method was REFRESH FORCE, then this

materialized view would be fast refreshable only if the sales table was updated but not

if the tables times or customers were updated.

CREATE MATERIALIZED VIEW detail_sales_mv

PARALLEL

BUILD IMMEDIATE

REFRESH FORCE AS

SELECT s.rowid "sales_rid", c.cust_id, c.cust_last_name, s.amount_sold,

s.quantity_sold, s.time_id

FROM sales s, times t, customers c

WHERE s.cust_id = c.cust_id(+) AND s.time_id = t.time_id(+);

You can create a nested materialized view on materialized views, but all parent and

base materialized views must contain joins or aggregates. If the defining queries for a

materialized view do not contain joins or aggregates, it cannot be nested. All the

underlying objects (materialized views or tables) on which the materialized view is

defined must have a materialized view log. All the underlying objects are treated as if

they were tables. In addition, you can use all the existing options for materialized

views.

Using the tables and their columns from the sh sample schema, the following

materialized views illustrate how nested materialized views can be created.

CREATE MATERIALIZED VIEW LOG ON sales WITH ROWID;

CREATE MATERIALIZED VIEW LOG ON customers WITH ROWID;

CREATE MATERIALIZED VIEW LOG ON times WITH ROWID;

/*create materialized view join_sales_cust_time as fast refreshable at

COMMIT time */

CREATE MATERIALIZED VIEW join_sales_cust_time

REFRESH FAST ON COMMIT AS

SELECT c.cust_id, c.cust_last_name, s.amount_sold, t.time_id,

t.day_number_in_week, s.rowid srid, t.rowid trid, c.rowid crid

FROM sales s, customers c, times t

WHERE s.time_id = t.time_id AND s.cust_id = c.cust_id;

To create a nested materialized view on the table join_sales_cust_time, you

would have to create a materialized view log on the table. Because this will be a

single-table aggregate materialized view on join_sales_cust_time, you need to

log all the necessary columns and use the INCLUDING NEW VALUES clause.

/* create materialized view log on join_sales_cust_time */

CREATE MATERIALIZED VIEW LOG ON join_sales_cust_time

WITH ROWID (cust_last_name, day_number_in_week, amount_sold)

INCLUDING NEW VALUES;

/* create the single-table aggregate materialized view sum_sales_cust_time

on join_sales_cust_time as fast refreshable at COMMIT time */

CREATE MATERIALIZED VIEW sum_sales_cust_time

REFRESH FAST ON COMMIT AS

SELECT COUNT(*) cnt_all, SUM(amount_sold) sum_sales, COUNT(amount_sold)

cnt_sales, cust_last_name, day_number_in_week

FROM join_sales_cust_time

GROUP BY cust_last_name, day_number_in_week;

Nesting Materialized Views with Joins and Aggregates

Some types of nested materialized views cannot be fast refreshed. Use EXPLAIN_

MVIEW to identify those types of materialized views. You can refresh a tree of nested

materialized views in the appropriate dependency order by specifying the nested =

TRUE parameter with the DBMS_MVIEW.REFRESH parameter. For example, if you call

DBMS_MVIEW.REFRESH ('SUM_SALES_CUST_TIME', nested => TRUE), the

REFRESH procedure will first refresh the join_sales_cust_time materialized

view, and then refresh the sum_sales_cust_time materialized view.

================ First MV =======================

CREATE MATERIALIZED VIEW cust_sales_mv

PCTFREE 0 TABLESPACE demo

STORAGE (INITIAL 16k NEXT 16k PCTINCREASE 0)

PARALLEL

BUILD IMMEDIATE

REFRESH COMPLETE

ENABLE QUERY REWRITE AS

select sum(salary),count(emp.department_id), department_name

from hr.employees emp, hr.departments dep

where emp.department_id = dep.department_id

group by department_name

I fired above query, on hr schema and got an error

"insufficient privileges"

So, logged in as SYS, fired again and got an error

"table space demo does not exists"

What table space do sys user/schema has ?

Checked it at

select *

from DBA_TABLESPACES

or

CREATE MATERIALIZED VIEW cust_sales_mv

PCTFREE 0 TABLESPACE users--demo

STORAGE (INITIAL 16k NEXT 16k PCTINCREASE 0)

PARALLEL

BUILD IMMEDIATE

REFRESH COMPLETE

ENABLE QUERY REWRITE AS

select sum(salary),count(emp.department_id), department_name

from hr.employees emp, hr.departments dep

where emp.department_id = dep.department_id

group by department_name

MV got created in 10 sec.

Fired select statement of MV and checked the Explain plan

It seems, Query re-wite worked and now data is getting fetched from MV and not from Base Tables.

Today, went at Zensar Circle again. Met Sir (Ashish Jaiswal) and Sikku Bhai (Sikandar Shaikh), sir explained some things related to Partition Table and as always Sikku Bhai told, its 10 months over now, looking for job change..:) With sir's 30min lecture on Partition table and backup, I got motivated and thought about old days. Around 1, 1.5 years back, had tried number of things on Materialized view, and thought will do something on that before I go to bed...

taking the stuff from below link of "data warehousing guide 10g"-- give this words as search at google.

http://docs.oracle.com/cd/B19306_01/server.102/b14223.pdf

The basic difference I saw in data modeling of Oracle and Teradata is, Oracle advice to create Start Or SnowFlex schema structure and Teradata prefers 3NF. We do not have to bother about advances and dis-advances, both gives us job and money, so both are good.

Oracle uses Bit Map indexes which are mostly created on Fact tables. Below stuff is simply copied from page number 96 [6 - 2] of above link.

"The advantages of using bitmap indexes are greatest for columns in which the ratio of

the number of distinct values to the number of rows in the table is small. We refer to

this ratio as the degree of cardinality. A gender column, which has only two distinct

values (male and female), is optimal for a bitmap index. However, data warehouse

administrators also build bitmap indexes on columns with higher cardinalities.

For example, on a table with one million rows, a column with 10,000 distinct values is

a candidate for a bitmap index. A bitmap index on this column can outperform a

B-tree index, particularly when this column is often queried in conjunction with other

indexed columns. In fact, in a typical data warehouse environments, a bitmap index

can be considered for any non-unique column.

B-tree indexes are most effective for high-cardinality data: that is, for data with many

possible values, such as customer_name or phone_number. In a data warehouse,

B-tree indexes should be used only for unique columns or other columns with very

high cardinalities (that is, columns that are almost unique). The majority of indexes in

a data warehouse should be bitmap indexes.

In ad hoc queries and similar situations, bitmap indexes can dramatically improve

query performance. AND and OR conditions in the WHERE clause of a query can be

resolved quickly by performing the corresponding Boolean operations directly on the

bitmaps before converting the resulting bitmap to rowids. If the resulting number of

rows is small, the query can be answered quickly without resorting to a full table scan." I do not know, why in my old project not a single index was bit-map, thought the structure was Snow Flex..

Lets start with Materialized View now....

What is Materialized View and Normal View ?

Materiazlied view is just like normal view, only thing is it eats its own space at DB which normal view do not. Materialized view keeps its data at its own space wherein normal view always fetches the data from its base tables. Because of Query-Re-write, thought you fire select query on base tables, oracle will fetch the data from materialized view[ If its defined on base tables] and many more...we are not preparing for interview here, so its not important to find out anything more. If needed, go through Basic MV chapter from this book.

Creating materialized view using DB Link, i.e. base tables are in another db, and your MV is in different one -- I had tried it earlier and it was worked that time.. While reading this book came across the line

"If a materialized view is to be used by query rewrite, it must be stored in the same database as the detail tables on which it relies".. this means you can create it, but query re-write will not work, Will try this at end as I do not have another db created at home. Seems Query Re-Write will not work if we are using db link at select query of materialized view, need to check.

Note:- Only thing by which you can not define MV is

"You cannot, however, define a materialized view with a subquery in the

SELECT list of the defining query. You can, however, include subqueries elsewhere in

the defining query, such as in the WHERE clause."

e.g. Query should not be like

select id, name, (select address from myTable), age

from employee

This will not work... All tables has to be at where clause or from clause.

As per book there are 3 types of MVs

■ Materialized Views with Aggregates

■ Materialized Views Containing Only Joins

■ Nested Materialized Views

Some thing imp to read before we start creating MV --

If you specify REFRESH FAST, Oracle performs further verification of the query

definition to ensure that fast refresh can be performed if any of the detail tables

change. These additional checks are:

■ A materialized view log must be present for each detail table unless the table

supports PCT. Also, when a materialized view log is required, the ROWID column

must be present in each materialized view log.

■ The rowids of all the detail tables must appear in the SELECT list of the

materialized view query definition.

If some of these restrictions are not met, you can create the materialized view as

REFRESH FORCE to take advantage of fast refresh when it is possible. If one of the

tables did not meet all of the criteria, but the other tables did, the materialized view

would still be fast refreshable with respect to the other tables for which all the criteria

are met.

Materialized Join Views FROM Clause Considerations

If the materialized view contains only joins, the ROWID columns for each table (and

each instance of a table that occurs multiple times in the FROM list) must be present in

the SELECT list of the materialized view.

If the materialized view has remote tables in the FROM clause, all tables in the FROM

clause must be located on that same site. Further, ON COMMIT refresh is not supported

for materialized view with remote tables. Materialized view logs must be present on

the remote site for each detail table of the materialized view and ROWID columns must

be present in the SELECT list of the materialized view, as shown in the following

example.

CREATE MATERIALIZED VIEW LOG ON sales WITH ROWID;

CREATE MATERIALIZED VIEW LOG ON times WITH ROWID;

CREATE MATERIALIZED VIEW LOG ON customers WITH ROWID;

CREATE MATERIALIZED VIEW detail_sales_mv

PARALLEL BUILD IMMEDIATE

REFRESH FAST AS

SELECT s.rowid "sales_rid", t.rowid "times_rid", c.rowid "customers_rid",

c.cust_id, c.cust_last_name, s.amount_sold, s.quantity_sold, s.time_id

FROM sales s, times t, customers c

WHERE s.cust_id = c.cust_id(+) AND s.time_id = t.time_id(+);

Alternatively, if the previous example did not include the columns times_rid and

customers_rid, and if the refresh method was REFRESH FORCE, then this

materialized view would be fast refreshable only if the sales table was updated but not

if the tables times or customers were updated.

CREATE MATERIALIZED VIEW detail_sales_mv

PARALLEL

BUILD IMMEDIATE

REFRESH FORCE AS

SELECT s.rowid "sales_rid", c.cust_id, c.cust_last_name, s.amount_sold,

s.quantity_sold, s.time_id

FROM sales s, times t, customers c

WHERE s.cust_id = c.cust_id(+) AND s.time_id = t.time_id(+);

You can create a nested materialized view on materialized views, but all parent and

base materialized views must contain joins or aggregates. If the defining queries for a

materialized view do not contain joins or aggregates, it cannot be nested. All the

underlying objects (materialized views or tables) on which the materialized view is

defined must have a materialized view log. All the underlying objects are treated as if

they were tables. In addition, you can use all the existing options for materialized

views.

Using the tables and their columns from the sh sample schema, the following

materialized views illustrate how nested materialized views can be created.

CREATE MATERIALIZED VIEW LOG ON sales WITH ROWID;

CREATE MATERIALIZED VIEW LOG ON customers WITH ROWID;

CREATE MATERIALIZED VIEW LOG ON times WITH ROWID;

/*create materialized view join_sales_cust_time as fast refreshable at

COMMIT time */

CREATE MATERIALIZED VIEW join_sales_cust_time

REFRESH FAST ON COMMIT AS

SELECT c.cust_id, c.cust_last_name, s.amount_sold, t.time_id,

t.day_number_in_week, s.rowid srid, t.rowid trid, c.rowid crid

FROM sales s, customers c, times t

WHERE s.time_id = t.time_id AND s.cust_id = c.cust_id;

To create a nested materialized view on the table join_sales_cust_time, you

would have to create a materialized view log on the table. Because this will be a

single-table aggregate materialized view on join_sales_cust_time, you need to

log all the necessary columns and use the INCLUDING NEW VALUES clause.

/* create materialized view log on join_sales_cust_time */

CREATE MATERIALIZED VIEW LOG ON join_sales_cust_time

WITH ROWID (cust_last_name, day_number_in_week, amount_sold)

INCLUDING NEW VALUES;

/* create the single-table aggregate materialized view sum_sales_cust_time

on join_sales_cust_time as fast refreshable at COMMIT time */

CREATE MATERIALIZED VIEW sum_sales_cust_time

REFRESH FAST ON COMMIT AS

SELECT COUNT(*) cnt_all, SUM(amount_sold) sum_sales, COUNT(amount_sold)

cnt_sales, cust_last_name, day_number_in_week

FROM join_sales_cust_time

GROUP BY cust_last_name, day_number_in_week;

Nesting Materialized Views with Joins and Aggregates

Some types of nested materialized views cannot be fast refreshed. Use EXPLAIN_

MVIEW to identify those types of materialized views. You can refresh a tree of nested

materialized views in the appropriate dependency order by specifying the nested =

TRUE parameter with the DBMS_MVIEW.REFRESH parameter. For example, if you call

DBMS_MVIEW.REFRESH ('SUM_SALES_CUST_TIME', nested => TRUE), the

REFRESH procedure will first refresh the join_sales_cust_time materialized

view, and then refresh the sum_sales_cust_time materialized view.

================ First MV =======================

CREATE MATERIALIZED VIEW cust_sales_mv

PCTFREE 0 TABLESPACE demo

STORAGE (INITIAL 16k NEXT 16k PCTINCREASE 0)

PARALLEL

BUILD IMMEDIATE

REFRESH COMPLETE

ENABLE QUERY REWRITE AS

select sum(salary),count(emp.department_id), department_name

from hr.employees emp, hr.departments dep

where emp.department_id = dep.department_id

group by department_name

I fired above query, on hr schema and got an error

"insufficient privileges"

So, logged in as SYS, fired again and got an error

"table space demo does not exists"

What table space do sys user/schema has ?

Checked it at

select *

from DBA_TABLESPACES

or

-

USER_TABLESPACES

-

V$TABLESPACE

CREATE MATERIALIZED VIEW cust_sales_mv

PCTFREE 0 TABLESPACE users--demo

STORAGE (INITIAL 16k NEXT 16k PCTINCREASE 0)

PARALLEL

BUILD IMMEDIATE

REFRESH COMPLETE

ENABLE QUERY REWRITE AS

select sum(salary),count(emp.department_id), department_name

from hr.employees emp, hr.departments dep

where emp.department_id = dep.department_id

group by department_name

MV got created in 10 sec.

Fired select statement of MV and checked the Explain plan

It seems, Query re-wite worked and now data is getting fetched from MV and not from Base Tables.

Tuesday, November 22, 2011

connect by level !!

Every day teaches you something new,,, only thing is, you should keep your eyes open and ears alert.

While working on INC today, got something new like below - which I had never thought of.

select to_date(startDate,'dd-mon-yyyy') - 1 + level

from dual

connect by level <= (to_date(endDate,'dd-mon-yyyy')+1) - to_date(startDate,'dd-mon-yyyy')

from dual

connect by level <= (to_date(endDate,'dd-mon-yyyy')+1) - to_date(startDate,'dd-mon-yyyy')

Scenario

was, start date and end date are 2 IN parameters for my proc. Using

those, they were generating date ranges. Lets say, you gave start date

as 1-Nov-2011 and end date as 10-Nov-2011, So the query will generate 10

rows with 1st to 10 Nov as data.

As in image, query will generate the result.

How will it work?

Seems, there is a keyword connect by level just as connect by prior.

select level

from dual

connect by level <= 10 ;

When you fire above statement, it will

generate records with 1 to 10 numbers.

Same logic they have used in generating Date Range series. Level they have creating

by subtracting End Date and Start Date and simply added level to start date. As

keeps on increase at every iteration, date gets increase along with it.

You can get more information at google, but following like I like most

http://www.sqlsnippets.com/en/topic-11821.html

They query you can use for date series preparation is

select to_date('1-Nov-2011','dd-mon-yyyy') -1 + level

from dual

connect by level <= (to_date('9-Nov-2011','dd-mon-yyyy')+1) - to_date('1-Nov-2011','dd-mon-yyyy')

Sunday, November 13, 2011

How do they do it - Part - 1

It may be very trivial or unimportant thing to most, but for me, it was a dream come true. I observed most of the people who do not work on HTML / UI Development, are really not interested in how do they create this UI, but not sure how, I am very fond of it.

After hearing much about jQuery and CSS, and observing www.bbc.com and www.google.org, I was wondering, how they have developed this site.

Just simply open these sites from FireFox browser - if you open those from IE you can see the html code but won't able to see the css behind those.

To start with, we will first see the www.bbc.com, which is full of css3 and jQuery. Image Rotation, Panels and number of things are with jQuery and definetly of css.

Here, we see how the right pan of bbc.com is made of...that is, when you scroll your mouse, numbering gets lighten.

Below is the basic code without applying css and it looks like below --

<html>

<div id="mostPopular_tabset_watched" class="selected panel">

<ul class="blq-clearfix">

<li class="ol0 first-child">

<a rev="mostpopular|homepage|na|r|t|i|text|content" href="http://www.bbc.co.uk/news/world-us-canada-15319106"><span class="livestats-icon livestats-1">1: </span>Mandar</a> </li>

<li class="ol1">

<a rev="mostpopular|homepage|na|r|t|i|text|content" href="http://www.bbc.co.uk/2/hi/8148527.stm"><span class="livestats-icon livestats-2">2: </span>Korea plane lands on its tail</a> </li>

<li class="ol2">

<a rev="mostpopular|homepage|na|r|t|i|text|content" href="http://www.bbc.co.uk/2/hi/asia-pacific/7874393.stm"><span class="livestats-icon livestats-3">3: </span>Australian pilot does a 'Hudson'</a> </li>

<li class="ol3">

<a rev="mostpopular|homepage|na|r|t|i|text|content" href="http://www.bbc.co.uk/2/hi/programmes/click_online/9615908.stm"><span class="livestats-icon livestats-4">4: </span>Ceatec - pushing a touch screen's buttons</a> </li>

<li class="ol4">

<a rev="mostpopular|homepage|na|r|t|i|text|content" href="http://www.bbc.co.uk/news/video_and_audio/"><span class="livestats-icon livestats-5">5: </span>One-minute World News</a> </li>

</ul>

</div>

Wednesday, October 26, 2011

Viewing Old Map Version at Informatica....

If you are relying on Infomatica's versioning functionality - dependance is poor death. Had a very bad experience .. please read below...

Not sure, what was the day, most of the ppl were not in office. Got a new QC and RK assigned that to me. To start with exported the workflow and before importing to ravi's folder, just confirmed with him if someone else is using or not. After his Yes, imported workflow, and started working on new qc.

Process is, we have write access to user and poroject fodler but not for qa and prod. We need to export workflows from qa and import those to user folder. Have to complete development and testing at User folder and after UAT sign-off, we migrate code to project folder. From Project -->> QA -->> Prod pada will take care the migration process. PADA is a tool for migration.

Till 5, completed the 10% of work, went for the tea, came up by 5:30; that time got a ping from Sergey that when he tries to do testing for scenario, mapping is getting failed. Thought, my qc is not so urgent, so will help sergey to resolve the issue.

Went to feed, and checked from which folder his is running the mapping and felt like standing alone in desert, no water, and close to dye. :-(

Yes, I had imported my new code, where Mansi had did his development. I had replaced my all overlapping targets, sources, maps, tranformations in ravi's folder where mansi's code was there for UAT. All got messed now, and map got invalidated and because of which Sergey could not test the things.

Informed him that we have some issues at informatica level and trying to resolve those. As this situation was quite new to me, I asked help to dada (abhishek sengupta).

Below are the things we tried...

1. Checked for the versions, that what all objects got affected. But it was very hard to find it out, as numberof maps and source/targets were involved.

2. So, decided to go one by one with maps, which are affected. Took first map, checked the version..from the comment which I gave while importing, I could easy find out the affect maps.

3. To get the old version, I right click on respective version and click on Open In Workspace, and that particular map version got opened.

4. For first time, I felt my ass got saved, and I can re-commit this version, but when I checked the targets, it was still showing as latest version, it was not same as the map version.

5. I checked if really old target version is present at informatica, and went to targets and checked the versioning, yes that version was present,

6. I checked the dependancies for old target, it showed me map of that version.

7. I opened that map in workspace again, but no luck, again target was of latest version :-(..

This is a dam bug in informatica, that it shows everything of old version except the targets and because of which though you could see rest old part, its of no use.

Below is a replica of such scenario...

1. I am reducing the target table ports in my project folder map; keep in mind - only when your ports gets reduced, it will really affect the map as original ports will get dis-connected. When you add new ports, it will not affect the map.

2. Refresh the affected map, where this target got used.

3. Export the map and import it at QA folder. Replace all objects, so now QA will have also one target for which one port got deleted.

4. Check in all objects which you have newly imported to QA folder.

5. Now, right click on map and click on versioning --> View History

When you click on view history, new window will appar which will display you the total number versions exists till now.

When you click on view history, new window will appar which will display you the total number versions exists till now.

Here, all things work fine - means you will be able to view all objects in a map which belogs to that old verison, except target definition. This is a bug of informatica, that it wont show Target of that particular version but the target is always of latest version.

So, suppose you in-intentionally or un-knowingly copied your map or done some changes in map of a particular folder, and if the older version of that map got updated. Then you can view/get your old map iff the target defination is not changed.

If you have done some changes at target definition like added more columns, connected more ports to target level etc.. then there is no way to get your old version back, and you need to do your development again for earlier version.

Not sure, what was the day, most of the ppl were not in office. Got a new QC and RK assigned that to me. To start with exported the workflow and before importing to ravi's folder, just confirmed with him if someone else is using or not. After his Yes, imported workflow, and started working on new qc.

Process is, we have write access to user and poroject fodler but not for qa and prod. We need to export workflows from qa and import those to user folder. Have to complete development and testing at User folder and after UAT sign-off, we migrate code to project folder. From Project -->> QA -->> Prod pada will take care the migration process. PADA is a tool for migration.

Till 5, completed the 10% of work, went for the tea, came up by 5:30; that time got a ping from Sergey that when he tries to do testing for scenario, mapping is getting failed. Thought, my qc is not so urgent, so will help sergey to resolve the issue.

Went to feed, and checked from which folder his is running the mapping and felt like standing alone in desert, no water, and close to dye. :-(

Yes, I had imported my new code, where Mansi had did his development. I had replaced my all overlapping targets, sources, maps, tranformations in ravi's folder where mansi's code was there for UAT. All got messed now, and map got invalidated and because of which Sergey could not test the things.

Informed him that we have some issues at informatica level and trying to resolve those. As this situation was quite new to me, I asked help to dada (abhishek sengupta).

Below are the things we tried...

1. Checked for the versions, that what all objects got affected. But it was very hard to find it out, as numberof maps and source/targets were involved.

2. So, decided to go one by one with maps, which are affected. Took first map, checked the version..from the comment which I gave while importing, I could easy find out the affect maps.

3. To get the old version, I right click on respective version and click on Open In Workspace, and that particular map version got opened.

4. For first time, I felt my ass got saved, and I can re-commit this version, but when I checked the targets, it was still showing as latest version, it was not same as the map version.

5. I checked if really old target version is present at informatica, and went to targets and checked the versioning, yes that version was present,

6. I checked the dependancies for old target, it showed me map of that version.

7. I opened that map in workspace again, but no luck, again target was of latest version :-(..

This is a dam bug in informatica, that it shows everything of old version except the targets and because of which though you could see rest old part, its of no use.

Below is a replica of such scenario...

1. I am reducing the target table ports in my project folder map; keep in mind - only when your ports gets reduced, it will really affect the map as original ports will get dis-connected. When you add new ports, it will not affect the map.

2. Refresh the affected map, where this target got used.

3. Export the map and import it at QA folder. Replace all objects, so now QA will have also one target for which one port got deleted.

4. Check in all objects which you have newly imported to QA folder.

5. Now, right click on map and click on versioning --> View History

When you click on view history, new window will appar which will display you the total number versions exists till now.

When you click on view history, new window will appar which will display you the total number versions exists till now.

6. Just again right click on object (here its map) of version you want, and click on Open In Workspace.

Here, all things work fine - means you will be able to view all objects in a map which belogs to that old verison, except target definition. This is a bug of informatica, that it wont show Target of that particular version but the target is always of latest version.

So, suppose you in-intentionally or un-knowingly copied your map or done some changes in map of a particular folder, and if the older version of that map got updated. Then you can view/get your old map iff the target defination is not changed.

If you have done some changes at target definition like added more columns, connected more ports to target level etc.. then there is no way to get your old version back, and you need to do your development again for earlier version.

Migration :-(

Ya, Migration was never so tedius, boaring and bugging job for me. It all depends on work you are doing. On the eve of Dipavali, I was all set to run for mumbai and never thought that simple migration will eat my time like anything.

It was a GT migration, as Nilesh was on leave it came on my head. Totally there were 7 workflows needs to be migrated. First time I saw that all workflows under particular folder are getting migrated. Though changes are in only 3 mappings not sure why do they migrate whole code :-(

Each workflow out of 7 has again 12 sessions each. Every sessions was of particular map, so 12 mapping for each session. Out of 12 mappings, 2 mappings were so big, that if you try to open those at Mapping Developer, it was getting hang. Those maps were having 15-16 pipelines each and number of tranformations. yes, that migration was really big.

Around 5 pm, started the migration, and had a plan pack the bags by 6 completing the migration and applying the label.

First Took export of all 7 workflows each and checked for verbose mode. Ya, that thing cs ppl do not like :-(. After getting clear on that, started importing one by one. As changes were not made by me, was very conscious while verifying each and every tranformations, especially targets and source. [THere is a separate nightmare of versioning, will describe it some other time]. For first successful import it took me 20 mins :). After that completed the second workflow which took another 20 mins. Clock's pointer were almost reaching 6, and this time Bikram asked me to call Nilesh again. As was already frustrated, without any hesitation I dialed Nilesh again. and asked him how much time the whole migration usually takes?

He first asked me, how you are doing the migration, one - by - one workflow? and obviouly the answer was Yes.

He started laughing, and said, first take the export by all 7 workflows simultaneously and import only one xml as mostly all trnaformations - source, targets, lookups, filters, expressiosn etc - are common.

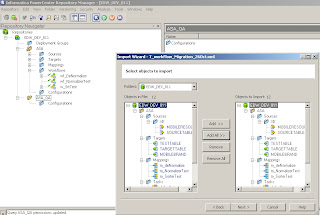

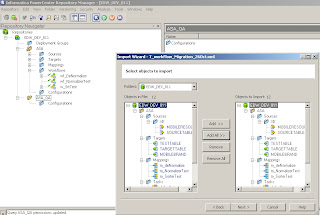

* first lesson learned - if you are migrating such large number of workflows, took export of all at same time. As below

At Repository Manager

1. Select all workflows which needs to be migrated and go to repository menu and inside that click on export.

2. Save the wolething as a single xml, give anyname you want. e.g. 7_workFlow_Migration_26Oct.xml

3. Import that xml again, into project or qa folder.

As you click on import, another window will get popup. Depending upon your requirement, select objects which you want to migrate. In my case, I had selected all objects. and click on next.

Another widow will get appear which will ask you to select the folder - source to destination. Tool is such claver, that it will pick source folder information from xml which you have exported from - hats of to the informaitca coders. For first time, destination folder will be same as source folder name - tool is still not able to read what's in your mind, wait for some more years.

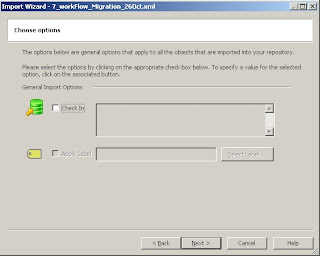

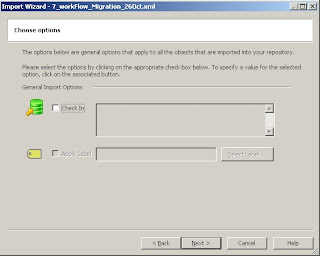

After choosing correct source and destination folders, go ahead by click next. A new Window will ask you whether you want to checkin your new objects in new folder? Now here, Its a belief - its a belief as I never tried it - that if we checkin hear, comments which we give will not appear for checked in objects. So, as per advice by our seniors, please skip this step and do not click on check in.

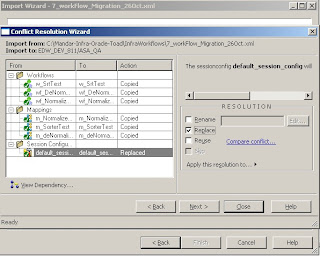

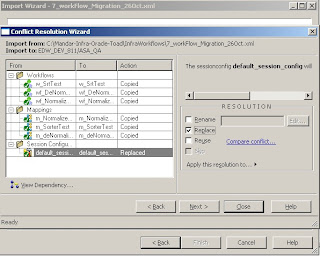

To resolve the conflicts between objects, new window will appear which will ask you to create any rule if you want. I never came across any such need, so just skip this step and click next.

To resolve the conflicts between objects, new window will appear which will ask you to create any rule if you want. I never came across any such need, so just skip this step and click next.

When you go to next window, here its an important task for decision. You need to resolve the conflicts here either you have to reuse, replace or rename the objects in new folder where you are copying into. In my case, as new folder was totally empty, its showing conflicts only for session properties.

When you go to next window, here its an important task for decision. You need to resolve the conflicts here either you have to reuse, replace or rename the objects in new folder where you are copying into. In my case, as new folder was totally empty, its showing conflicts only for session properties.

This is the last step which you will be follwing. and after it you just have to click on import button and all mappings, sessions, wokflow and tranformations inside it will get copied.

This is the last step which you will be follwing. and after it you just have to click on import button and all mappings, sessions, wokflow and tranformations inside it will get copied.

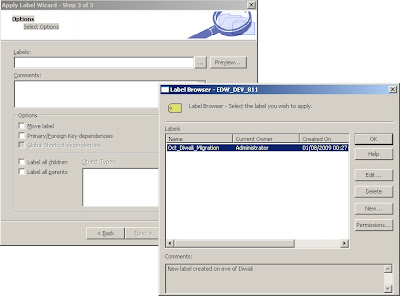

Just give appropriate label name and click on OK. imp: though at image label name is starting with number, it wont allow first character as number and it has to be alphabet. Now, you want to apply label to all workflows which you have migrated. Thought in a folder, there are only 7 workflows, you are not supposed to add label on whole folder level, reason is, there can be number of objects which are not dependant on 7 workflows, and those will get migrated un-necessarily.

Just give appropriate label name and click on OK. imp: though at image label name is starting with number, it wont allow first character as number and it has to be alphabet. Now, you want to apply label to all workflows which you have migrated. Thought in a folder, there are only 7 workflows, you are not supposed to add label on whole folder level, reason is, there can be number of objects which are not dependant on 7 workflows, and those will get migrated un-necessarily.

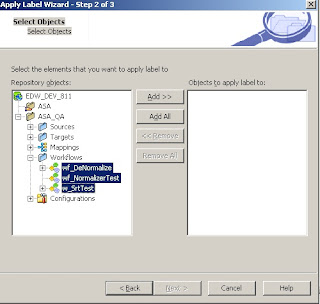

Click on Next, and window will appear where you want to select the objects for which you want to apply the label.

Click on Next, and window will appear where you want to select the objects for which you want to apply the label.

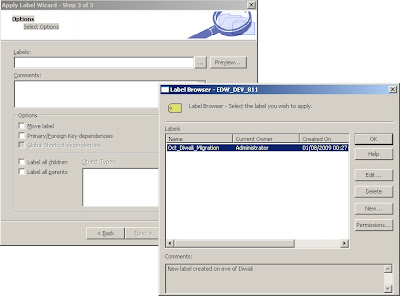

Click on Add, and again Next. New windlow will appear where you will actually select the label which you are going to apply.

Click on Add, and again Next. New windlow will appear where you will actually select the label which you are going to apply.

After selecting the required label, click on OK, give the meaningful comment, and do not forgot to check "Label All Chindren" and "Label All Parents". Click on finish and you are done with your job.

After selecting the required label, click on OK, give the meaningful comment, and do not forgot to check "Label All Chindren" and "Label All Parents". Click on finish and you are done with your job.

It was a GT migration, as Nilesh was on leave it came on my head. Totally there were 7 workflows needs to be migrated. First time I saw that all workflows under particular folder are getting migrated. Though changes are in only 3 mappings not sure why do they migrate whole code :-(

Each workflow out of 7 has again 12 sessions each. Every sessions was of particular map, so 12 mapping for each session. Out of 12 mappings, 2 mappings were so big, that if you try to open those at Mapping Developer, it was getting hang. Those maps were having 15-16 pipelines each and number of tranformations. yes, that migration was really big.

Around 5 pm, started the migration, and had a plan pack the bags by 6 completing the migration and applying the label.

First Took export of all 7 workflows each and checked for verbose mode. Ya, that thing cs ppl do not like :-(. After getting clear on that, started importing one by one. As changes were not made by me, was very conscious while verifying each and every tranformations, especially targets and source. [THere is a separate nightmare of versioning, will describe it some other time]. For first successful import it took me 20 mins :). After that completed the second workflow which took another 20 mins. Clock's pointer were almost reaching 6, and this time Bikram asked me to call Nilesh again. As was already frustrated, without any hesitation I dialed Nilesh again. and asked him how much time the whole migration usually takes?

He first asked me, how you are doing the migration, one - by - one workflow? and obviouly the answer was Yes.

He started laughing, and said, first take the export by all 7 workflows simultaneously and import only one xml as mostly all trnaformations - source, targets, lookups, filters, expressiosn etc - are common.

* first lesson learned - if you are migrating such large number of workflows, took export of all at same time. As below

At Repository Manager

1. Select all workflows which needs to be migrated and go to repository menu and inside that click on export.

2. Save the wolething as a single xml, give anyname you want. e.g. 7_workFlow_Migration_26Oct.xml

3. Import that xml again, into project or qa folder.

As you click on import, another window will get popup. Depending upon your requirement, select objects which you want to migrate. In my case, I had selected all objects. and click on next.

Another widow will get appear which will ask you to select the folder - source to destination. Tool is such claver, that it will pick source folder information from xml which you have exported from - hats of to the informaitca coders. For first time, destination folder will be same as source folder name - tool is still not able to read what's in your mind, wait for some more years.

After choosing correct source and destination folders, go ahead by click next. A new Window will ask you whether you want to checkin your new objects in new folder? Now here, Its a belief - its a belief as I never tried it - that if we checkin hear, comments which we give will not appear for checked in objects. So, as per advice by our seniors, please skip this step and do not click on check in.

To resolve the conflicts between objects, new window will appear which will ask you to create any rule if you want. I never came across any such need, so just skip this step and click next.

To resolve the conflicts between objects, new window will appear which will ask you to create any rule if you want. I never came across any such need, so just skip this step and click next. When you go to next window, here its an important task for decision. You need to resolve the conflicts here either you have to reuse, replace or rename the objects in new folder where you are copying into. In my case, as new folder was totally empty, its showing conflicts only for session properties.

When you go to next window, here its an important task for decision. You need to resolve the conflicts here either you have to reuse, replace or rename the objects in new folder where you are copying into. In my case, as new folder was totally empty, its showing conflicts only for session properties.  This is the last step which you will be follwing. and after it you just have to click on import button and all mappings, sessions, wokflow and tranformations inside it will get copied.

This is the last step which you will be follwing. and after it you just have to click on import button and all mappings, sessions, wokflow and tranformations inside it will get copied.

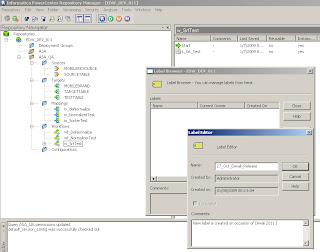

The story do not end here actually, after importing all objects, last task was to check-in all the objects with proper comment, so that we can identify what all objects we have imported.

If you observe, here ASA_QA folder is empty, even though we have imported the 3 workflows. Why? because those are not yet checked in. Now, once we check in those all objects, hopfully tool will work fine and will show the objects at QA folder.

give proper comment for objects you are checking in.

Once you checked in those, as per your expectations, tool do not work :). You need to re-connect to the repository and again need to open the QA folder. And now, you can view the things which you are expecting.

But, still my story do not end here, if you have observed, while importing, my all objects were in checked out status, but after importing and checkin in at QA, not a single object was impacted or invalid. Yes that thing you need to verify after you done with the import.

At the time of my migraion, means once I had imported all 7 workflows, one mapping was showing me as impacted. Initially I thought, because of my migration, map got impacted, so I went to map designer, checked it out, did the validation and again checked. I varified the comment just to verify if its been migrated from my user folder, and found the comment was missing. I checked for dependancing now and I could see number of dependant tranformations. "How will you check the dependancy --> just right click on map [or any object for that matter] and click on ' view dependancies..'.

Now I lost my temper, and cursying myself like anything, what went wrong I was not sure. I checked all objects [totally 130+] one by one, noted those which do not have comment which I gave while importing. Searched those missing comments objects at old user folder and found, those objects were not present. Eureka !! Got a clque, might be those sessions and mappings are not connected with those 7 workflows wich I had migrated. So, this time while checking the dependancing, I just click on workflow

Fortune favors the brave, and there were no results :). I had did my job correctly. Only thing I missed is, before importing the objects, I forgot to check if any object is checked-out, impacted or invalid in project or QA folder. Be sure you will verify that...

Now only thing, which was remaining was applying the label.

LABEL -- though not important for developers, but very crucial for ppl who are doing actual migration. Because from Stg --> QA --> Prod migration happens without humam intervation, automatically by tools like pvcs, or pada depending on company.

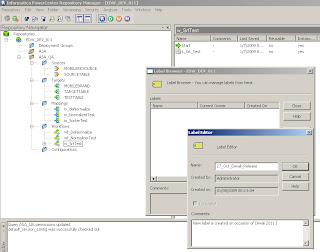

Go to versioning menu --> inside that Labels ..

After clicking on Label, new window will appear which will ask you to give lavel name which you want to create.

Just give appropriate label name and click on OK. imp: though at image label name is starting with number, it wont allow first character as number and it has to be alphabet. Now, you want to apply label to all workflows which you have migrated. Thought in a folder, there are only 7 workflows, you are not supposed to add label on whole folder level, reason is, there can be number of objects which are not dependant on 7 workflows, and those will get migrated un-necessarily.

Just give appropriate label name and click on OK. imp: though at image label name is starting with number, it wont allow first character as number and it has to be alphabet. Now, you want to apply label to all workflows which you have migrated. Thought in a folder, there are only 7 workflows, you are not supposed to add label on whole folder level, reason is, there can be number of objects which are not dependant on 7 workflows, and those will get migrated un-necessarily.

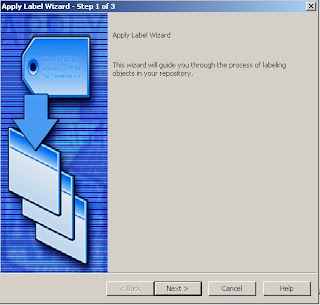

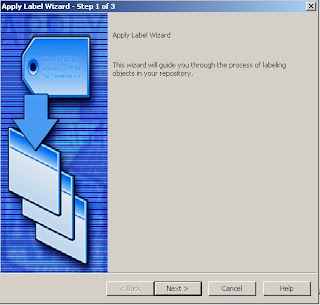

How will you apply label :- Select folder name which contains your workflows, go to Versioning -->Apply Label

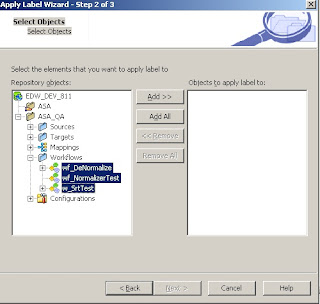

Click on Next, and window will appear where you want to select the objects for which you want to apply the label.

Click on Next, and window will appear where you want to select the objects for which you want to apply the label.

Here, do not select whole folder, but all required workflows.

Click on Add, and again Next. New windlow will appear where you will actually select the label which you are going to apply.

Click on Add, and again Next. New windlow will appear where you will actually select the label which you are going to apply. After selecting the required label, click on OK, give the meaningful comment, and do not forgot to check "Label All Chindren" and "Label All Parents". Click on finish and you are done with your job.

After selecting the required label, click on OK, give the meaningful comment, and do not forgot to check "Label All Chindren" and "Label All Parents". Click on finish and you are done with your job.

Just to verify, for how many objects label has been applied, you can create a query at informatica level.

Query :-- Go to tool at menubar and inside that click on queries..A new window will appear which will ask you to create new query as below

Give query name you want, select parameter - here I have selected as label, give the respective criteria.

And shoot on blue arrow. You will get to see all objects which which this label got applied.

Just verify how many objects you were actually migrated, and for how many objects the label got assigned. Note - label will get assigned only for latest checked in version of an object.

Before I end up - below are the things to always check at the time of migration

1. First verify at ur project/qa or developer folder any object is impacted, checkedout or invalid. If its so, first resolve that and then start for migration.

2. In case of large migration, do not do it one by one, rather take export of all worklows in single go and import all things in single go.

3. Do not checkin object while you are importing it, as comment will not appear in such cases if you do so.

4. After you imported all workflows/objects, please checkin those objects first.

5. Just re-verify if any of the object is impacted, checked out or invalid at project or qa folder.

6. Again write a query on the basis of comment, and count actual number of objects you were migrated and actualy numbr of objects for which comment has been assigned.

7. Once you confirm all above things, apply label only to those objects which you have actually migrated, be careful at this step.

8. Again write a query on basis of applied label and count number of objects again.

Hope this will help you..good luck

Saturday, October 15, 2011

Query at ReportExtract

While working on report extract, came across a situation which I thought can not be handled using simple sql query and needs to write pl-sql block; but Sandesh Birwatkar gave a simple solution.

Solution is quite simple, but I never thought of that..

As an example, below is the scenario

You have an employee table, and user will give department number and from which you need to fetch out the respective employees.

select *

from employees

where department_id in (

select distinct department_id

from departments

where department_id = '&DepID'

)

But, twist is, if department table do not have any records, query should return all employee records.

How will you do it? below is the solution

create table departmentsBKP

as

select * from departments

select *

from employees

where department_id in (

select distinct department_id

from departmentsBKP

where department_id = '&DepID'

)

union

select *

from employees

where 0 = (select count(*) from departmentsBKP)

delete from departmentsBKP

and now again if u fire select statement, you will get all records of employees table.

Solution is quite simple, but I never thought of that..

As an example, below is the scenario

You have an employee table, and user will give department number and from which you need to fetch out the respective employees.

select *

from employees

where department_id in (

select distinct department_id

from departments

where department_id = '&DepID'

)

But, twist is, if department table do not have any records, query should return all employee records.

How will you do it? below is the solution

create table departmentsBKP

as

select * from departments

select *

from employees

where department_id in (

select distinct department_id

from departmentsBKP

where department_id = '&DepID'

)

union

select *

from employees

where 0 = (select count(*) from departmentsBKP)

delete from departmentsBKP

and now again if u fire select statement, you will get all records of employees table.

Monday, October 10, 2011

Friday, September 23, 2011

Use of nls_date_language parameter in Oracle

There are some problems where your theoritical knowledge is not at all important. You need to go through such situation. Read an example below..

You have an application whose users are all over the world. You are processing some files every day i.e. each day a new files comes in and you process that using the application available. Even in that, every day you handle one set of file - say for an example on Monday you handle file A, on Tuesday you handle file B, on Wednesday you handle file C etc.

Now issue here we faced was, at India or Germany or France for a particular day files which was getting picked up by the application was not same as application was picking file at US. We checked the date at both end and it was same. So what was fishing? please read below...

Now question is, how will you identify the day of a week - yaa simple SQL Query

select to_char(sysdate,'D') from dual;

Looks pretty simple and result is quite obvious, it should be ???

We normally counts our week start day as Monday and Week end day as Sunday. so if today is 7-Jan-2009 which is wednesday, the above query will or should return 3 i.e. thid day of a week.

But this does not ends here...Please go through below queries

I have checked the nls_territory parameter value of my db, which results into AMERICA.

SQL> connect

Enter user-name: sys as sysdba

Enter password:

Connected.

SQL> show parameter nls_territory;

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

nls_territory string AMERICA

SQL>

SQL> select to_char(sysdate,'D') from dual;

T

-

4

Above query gave me the result as 4, so I varified the sysdate.

SQL> select to_char(sysdate, 'dd-mon-yyyy HH:MI:SS AM') from dual;

TO_CHAR(SYSDATE,'DD-MON'

-----------------------

07-jan-2009 01:26:14 AM

SQL> select to_char(sysdate,'D') from dual;

T

-

4

SQL>

SQL> select to_char(sysdate, 'Day') from dual;

TO_CHAR(S

---------

Wednesday

SQL>

So, it showed me that, its a 7-Jan-2009, Wednesday and Week Day is 4

Now, I changed my parameter - nls_territory - value to FRANCE

SQL> ALTER SESSION SET NLS_TERRITORY = France;

Session altered.

I again verify my above values

SQL> select to_char(sysdate,'D') from dual;

T

-

3

SQL> select sysdate from dual;

SYSDATE

--------

07/01/09

SQL> select to_char(sysdate, 'dd-mon-yyyy HH:MI:SS') from dual;

TO_CHAR(SYSDATE,'DD-'

--------------------

07-jan-2009 01:22:06

SQL> select to_char(sysdate, 'Day') from dual;

TO_CHAR(S

---------

Wednesday

What does it means?

For 7-Jan-2009, wednesday, if US is a territory Day of a week is 4 but if FRANCE as a territory Day of a week is 3; mmm quite weired, isnt it?

We can try it out using nls_date_language..

SQL> alter session set nls_date_language=GERMAN;

Session altered.

SQL> select to_char(sysdate, 'Day') from dual;

TO_CHAR(SY

----------

Mittwoch

SQL> alter session set nls_date_language=AMERICA;

ERROR:

ORA-12705: Cannot access NLS data files or invalid environment specified

SQL> alter session set nls_date_language=AMERICAN;

Session altered.

SQL> select to_char(sysdate, 'Day') from dual;

TO_CHAR(S

---------

Wednesday

SQL>

This means that, even if we try using Day instead of D, results are different in both cases i.e at US and at Germany.

Try using below now...

SQL> alter session set nls_date_language = GERMAN;

Session altered.

Solution to this problem is, when you are calculating such values always use below to_char function

SQL> select to_char(sysdate, 'Day', 'nls_date_language = GERMAN') from dual;

TO_CHAR(SY

----------

Mittwoch

SQL> select to_char(sysdate, 'Day', 'nls_date_language = AMERICAN') from dual;

TO_CHAR(S

---------

Wednesday

SQL>

Yesss, this works,

So, the thumb rule is, where ever you are located, if you are working with date and timezones are different, be specific in forming a query and use nls_date_language of your query same globally. as mentioned below.

We do not know, why week day at US starts from Sunday and rest of the world from Monday.

You have an application whose users are all over the world. You are processing some files every day i.e. each day a new files comes in and you process that using the application available. Even in that, every day you handle one set of file - say for an example on Monday you handle file A, on Tuesday you handle file B, on Wednesday you handle file C etc.

Now issue here we faced was, at India or Germany or France for a particular day files which was getting picked up by the application was not same as application was picking file at US. We checked the date at both end and it was same. So what was fishing? please read below...

Now question is, how will you identify the day of a week - yaa simple SQL Query

select to_char(sysdate,'D') from dual;

Looks pretty simple and result is quite obvious, it should be ???

We normally counts our week start day as Monday and Week end day as Sunday. so if today is 7-Jan-2009 which is wednesday, the above query will or should return 3 i.e. thid day of a week.

But this does not ends here...Please go through below queries

I have checked the nls_territory parameter value of my db, which results into AMERICA.

SQL> connect

Enter user-name: sys as sysdba

Enter password:

Connected.

SQL> show parameter nls_territory;

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

nls_territory string AMERICA

SQL>

SQL> select to_char(sysdate,'D') from dual;

T

-

4

Above query gave me the result as 4, so I varified the sysdate.

SQL> select to_char(sysdate, 'dd-mon-yyyy HH:MI:SS AM') from dual;

TO_CHAR(SYSDATE,'DD-MON'

-----------------------

07-jan-2009 01:26:14 AM

SQL> select to_char(sysdate,'D') from dual;

T

-

4

SQL>

SQL> select to_char(sysdate, 'Day') from dual;

TO_CHAR(S

---------

Wednesday

SQL>

So, it showed me that, its a 7-Jan-2009, Wednesday and Week Day is 4

Now, I changed my parameter - nls_territory - value to FRANCE

SQL> ALTER SESSION SET NLS_TERRITORY = France;

Session altered.

I again verify my above values

SQL> select to_char(sysdate,'D') from dual;

T

-

3

SQL> select sysdate from dual;

SYSDATE

--------

07/01/09

SQL> select to_char(sysdate, 'dd-mon-yyyy HH:MI:SS') from dual;

TO_CHAR(SYSDATE,'DD-'

--------------------

07-jan-2009 01:22:06

SQL> select to_char(sysdate, 'Day') from dual;

TO_CHAR(S

---------

Wednesday

What does it means?

For 7-Jan-2009, wednesday, if US is a territory Day of a week is 4 but if FRANCE as a territory Day of a week is 3; mmm quite weired, isnt it?

We can try it out using nls_date_language..

SQL> alter session set nls_date_language=GERMAN;

Session altered.

SQL> select to_char(sysdate, 'Day') from dual;

TO_CHAR(SY

----------

Mittwoch

SQL> alter session set nls_date_language=AMERICA;

ERROR:

ORA-12705: Cannot access NLS data files or invalid environment specified

SQL> alter session set nls_date_language=AMERICAN;

Session altered.

SQL> select to_char(sysdate, 'Day') from dual;

TO_CHAR(S

---------

Wednesday

SQL>

This means that, even if we try using Day instead of D, results are different in both cases i.e at US and at Germany.

Try using below now...

SQL> alter session set nls_date_language = GERMAN;

Session altered.

Solution to this problem is, when you are calculating such values always use below to_char function

SQL> select to_char(sysdate, 'Day', 'nls_date_language = GERMAN') from dual;

TO_CHAR(SY

----------

Mittwoch

SQL> select to_char(sysdate, 'Day', 'nls_date_language = AMERICAN') from dual;

TO_CHAR(S

---------

Wednesday

SQL>

Yesss, this works,

So, the thumb rule is, where ever you are located, if you are working with date and timezones are different, be specific in forming a query and use nls_date_language of your query same globally. as mentioned below.

We do not know, why week day at US starts from Sunday and rest of the world from Monday.

Logic behind Number to Date conversion at Excel....

Number of time we have encountered, that some times our number are getting showed in date format and we simply change the format and read it as number as required.

Today while going through the code, I realized the need to understand the logic behind it.

Our source files comes in Excel format, wherein Date column (say as Purchase Date) comes in various formats e.g. 1-Jan-2011, 01-01-2011, 01-January-2011, 01/01/2011, 1-Jan-11 and 40544. All formats are readable except last one 40544.

Now question for me was, how can I convert this 40544 to date? How will my code understand that this is a number and what way should I convert this? Fortunately logic to decode this was available in other mapping.

How do the do it?

They first take out the length of a string (its a date but comes as a string port), first validates all the mentioned formats mentioned above (except number format) and at last if the nothing is true and if length is less than 6 - not sure why they check for less then 6 date; might be they want to stick only to 21st century - they apply below logic.

If we write 1 as a number of excel cell and convert that to date format it appears as 1/1/1900

i.e. for number 1 it considers as 1-Jan-1900. So if we specify 2 it will be 2-Jan-1900. Logic is we need to add that many number of days to 1-Jan-1900. If number is 40544 means 40543 days after 1-Jan-1900. This addition of 1 is not always true as there might be some leap years comes in and changing the date. If we negate 2, logic works fine

Below query will give the results in oracle --

select to_char(to_date('1-Jan-1900','dd-mon-yyyy') + 40544 - 2,'dd-mon-yyyy') from dual

Query above will give result as 02-jan-2111, which is mathcing with that of excel sheet.

In informatica the logic is as below

ADD_TO_DATE(TO_DATE('01/01/1900', 'dd/mm/yyyy'),'D',TO_INTEGER(NAME))

Add_to_Date will take 3 inputs,

1. Date in which you want to add

2. What you want to add - D/DD/Day means day. M/MM means month and Y/YY means year. Please see informatica help for further information

3. Last is how many numbers you want to add.

Wednesday, September 21, 2011

XML Source

Today after discussion with my PM , got a question in mind - how its working exactly. Though "Web Services Consumer" tranformation is not getting created at my end because of license issue, I had number of things to check for XML Tranformation

To start with I have taken XML Source

To create a XML source, you need to have a XML file. I have taken it from -- http://www.w3schools.com/xml/xml_tree.asp

<?xml version="1.0" encoding="ISO-8859-1"?>

<note>

<to>Tove</to>

<from>Jani</from>

<heading>Reminder</heading>

<body>Don't forget me this weekend!</body>

</note>

<note>

<to>Tove</to>

<from>Jani</from>

<heading>Reminder</heading>

<body>Don't forget me this weekend!</body>

</note>

Saved it at Local drive and imported XML Tranformation. While importing it, we get below options

1.You will come across such screen at the time of importing a XML definition. Keep every thing as default.

Sometimes last option "How would you like to generate names for the XML Columns" will be default as first option, select it as second option and check all the mentioned check boxes.

After clicking on OK, you will get a screen asking "How would you like the XML Views to be generated?" as specified below.

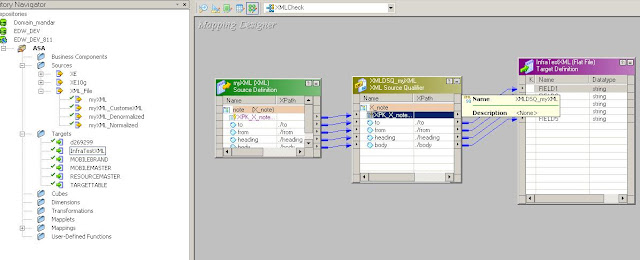

Choose any option of Entitiy Relationship and Hierarchy Relationship; structure will be same in this case. Structure may differ for rest of the option or the XML is of more complex nature.

Here, structure got pivoted. Note is the main node, that became the primary key of a xml tranformation, rest to, from, heading and body became port of the tranformation i.e. column of a table. So, main outer tag became the row and inner tags became column ultimately.

We will be connecting this XML Source to Target as CSV file.

Below is the structure of a mapping

This structure is pretty simple, only caution is at the time of connection definition at Session Level. You need to define the XML and Target file path cautiously - please see below

As in the image, we have given value of 2 parameters

Source File Directory = D:\InformaticaFiles\

Source File Name = myXML.xml

By same way you need to specify directory and name for target file.

Once you run the map, target file will have one row inserted as below.

Below is the out-put of target file. Note, while generating the target, create dummy csv file with 5 columns specified with 30 as a each column size.

=================================================================

Now we added 2 more pipelines - so there are not totally 3 pipelines. First will have Normalized XML, second will have De-Normalized and the last one is created with option "Do not generate XML View". We will see regarding how to generate XML Source using "Do not generate XML View option", but if you notice at all 3 source definition, first 2 has one extra port - XPK_X_Note - which is also a primary key. This extra port is missing at 3rd definition which is created with "Do not generate XML View option". We will discuss on how to create XML Source using Do not generate xml view option later.

When we define same target file for all 3 targets, only 1 record get inserted - might be every pipeline will update the same record. So, we defined 3 separate files for each target, first 2 files have unique value for <node> got inserted, third file is missing that particular column.

First port - offcouse for first 2 sources - will generate a unique number for every run. In this case - second pipeline was not exists for first few runs. So, second XML Source count is slightly lacking behind the first source. What we have to find out is, if we dis-connect from repository, still that value persist?

Hmm, dis-connected from repository and after re-connecting it ran the workflow. Concluded values are persistent, might be getting saved at database against workflow run. Not sure how to re-set these values.

One more experiment I did was, I changed the XML Source file now as below

<?xml version="1.0"?>

<note>

<to>Tove</to>

<from>Jani</from>

<heading>Reminder</heading>

<body>Don't forget me this weekend!</body>

<to>staksale</to>

<from>magogate</from>

<heading>Reminder</heading>

<body>Meet tomorrow morning at 10</body>

<to>Tove</to>

<from>asjaiwa</from>

<heading>magogate</heading>

<body>Meet tomorrow morning at 10</body>

</note>

Now there are totally 3 records inside <node>, but when we run the workflow, it saved only first record of Jani.

This means that, our XML Source is defined to read only below structure

<note>

<to>Tove</to>

<from>Jani</from>

<heading>Reminder</heading>

<body>Don't forget me this weekend!</body>

</note>